Background

I recently started work on a new book. The title is still to be finalised, but the subject is clear: a practical look at popular test case design techniques. In this modern age of testing, you may be wondering why such a traditional subject needs a new book, and whether I would be better off writing about my experiences with testing in an agile environment, test automation or exploratory testing. Without doubt these are print-worthy topics, but I believe that the people who perform these tasks best are those with a solid understanding of test design, and it is for this reason that I wanted to focus on this topic first.

I was in two minds about publishing sample chapters, but I decided that it was something I wanted to do, especially when I felt the chapter in question added something to the body of testing knowledge freely available on the Internet. It is also for my own sanity. Writing a book is a lengthy endeavour, with few milestones that produce a warm glow until late in the process. Sharing the occasional chapter provides a much-needed boost.

The only caveat is that the chapter below is in need of a good copy editor. That said, I am happy with the content and messaging, so any thoughts, comments and questions are welcome. Enjoy!

Introduction

A Classification Tree is a graphical technique that allows us to combine the results of Boundary Value Analysis and Equivalence Partitioning with a more holistic view of the software we are testing, specifically the inputs we plan to interact with and the relationships that exist between them.

Whenever we create a Classification Tree, it can be useful to consider its growth in three stages – the root, the branches and the leaves. All trees start with a single root that represents an aspect of the software we are testing. Branches are then added to place the inputs we wish to test into context, before we finally apply Boundary Value Analysis or Equivalence Partitioning to the inputs we have just identified. The test data generated by applying Boundary Value Analysis or Equivalence Partitioning is added to the end of each branch in the form of one or more leaves.
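As a rough illustration of the three stages, the sketch below models a Classification Tree in Python. It is a minimal sketch under assumed conventions, not part of the technique itself: the classes `Branch` and `ClassificationTree`, their methods, and the root label "Timesheet entry" are hypothetical, and the Cost Code leaves anticipate the Equivalence Partitioning groups used for the timesheet example later in this chapter.

```python
from dataclasses import dataclass, field


@dataclass
class Branch:
    """An input we plan to interact with (hypothetical model)."""
    name: str
    leaves: list[str] = field(default_factory=list)  # test data from EP or BVA


@dataclass
class ClassificationTree:
    """Stage 1: a single root representing the aspect under test."""
    root: str
    branches: list[Branch] = field(default_factory=list)

    def add_branch(self, name: str) -> Branch:
        # Stage 2: add an input to place it into context.
        branch = Branch(name)
        self.branches.append(branch)
        return branch

    def add_leaves(self, branch_name: str, *leaves: str) -> None:
        # Stage 3: attach the test data produced by EP or BVA.
        for branch in self.branches:
            if branch.name == branch_name:
                branch.leaves.extend(leaves)
                return
        raise KeyError(f"no branch called {branch_name!r}")


tree = ClassificationTree("Timesheet entry")  # root (label assumed)
tree.add_branch("Time")                       # branches
tree.add_branch("Cost Code")
tree.add_leaves("Cost Code",                  # leaves
                "Any existing code combination",
                "Any non-existent code combination")
```

At this point the Time branch has no leaves yet, which mirrors a tree that has grown its branches but is still waiting for Boundary Value Analysis or Equivalence Partitioning to be applied.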

This combination of test data with a deeper understanding of the software we are testing can help highlight test cases that we may have previously overlooked. Once complete, a Classification Tree can be used to communicate a number of related test cases. This allows us to see the relationships between our test cases and understand the test coverage they will achieve.

There are different ways we can create a Classification Tree, including decomposing processes, analysing hierarchical relationships and brainstorming test ideas. Over the sections that follow, we will look at each approach and see how it can be used.

Process decomposition

In addition to testing software at an atomic level, it is sometimes necessary to test a series of actions that together produce one or more outputs or goals. Business processes fall into this category; however, when it comes to using a process as the basis for a Classification Tree, any type of process can be used. As a result, it is useful to think of processes in a way that conjures images not only of actions performed by a business, but also of the last ‘wizard’ you used as part of a desktop application and the algorithm you wrote to sort a list of data.

The majority of processes we encounter can be directly or indirectly controlled by inputs. These are the things that we like to sniff out and experiment with as testers, not just because it satisfies our natural curiosity, but because one day this particular set of inputs may be encountered by somebody whose opinion matters, and if they remain unchecked, who knows what their experience may be. All that we know about these inputs is that (in some way) they affect the outcome of the process we are testing. This may not sound like much of a connection, but it is one of the more frequently used heuristics for deciding the scope of a Classification Tree. Let us look at an example.

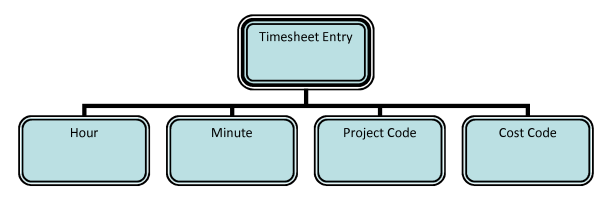

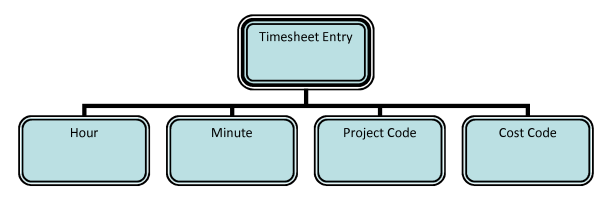

If you have ever worked in a commercial environment, you are likely to be familiar with the process of submitting an electronic timesheet. Let us assume that the purpose of this piece of testing is to check that we can make a single timesheet entry. At a high level, this process involves assigning some time (input 1) against a cost code (input 2). Based on these inputs, we now have enough information to draw the root and branches of our Classification Tree (Figure 1).

Figure 1: Classification Tree for timesheet entry (root and branches only)

Whilst our initial set of branches may be perfectly adequate, there are other ways we could choose to represent our inputs. Just like other test case design techniques, the Classification Tree technique can be applied at different levels of granularity or abstraction. Imagine that as we learn more about our timesheet system, we discover that it exposes two inputs for submitting the time (an hour input and a minute input) and two inputs for submitting the cost code (a project code and an activity code). With our newfound knowledge we could add a different set of branches to our Classification Tree (Figure 2), but only if we believe it will be to our advantage to do so. Neither of these Classification Trees is better than the other. One has more detail, from which we can specify more precise test cases, but is greater precision what we want? Precision comes at a cost and can sometimes hinder rather than help. What’s best for you and your project? Only you can decide.

Figure 2: Alternative Classification Tree for timesheet entry (root and branches only)

Hierarchical relationships

The Classification Trees we created for our timesheet system were relatively flat (they only had two levels – the root and a single row of branches). Whilst many Classification Trees never exceed this depth, there are occasions when we want to present our inputs in a more hierarchical way. This more structured presentation can help us organise our inputs and improve communication. It also allows us to treat different inputs at different levels of granularity, so that we can focus on a specific aspect of the software we are testing. This simple technique lets us work with slightly different versions of the same Classification Tree for different testing purposes. An example can be produced by merging our two existing Classification Trees for the timesheet system (Figure 3).

Figure 3: Classification Tree for timesheet entry (with hierarchy and mixed levels of granularity)

We do not necessarily need two separate Classification Trees to create a single Classification Tree of greater depth. Instead, we can work directly from the structural relationships that exist as part of the software we are testing. One of the nice things about the Classification Tree technique is that there are no strict rules for how multiple levels of branches should be used. As a result, we can take inspiration from many sources, ranging from the casual to the complex.

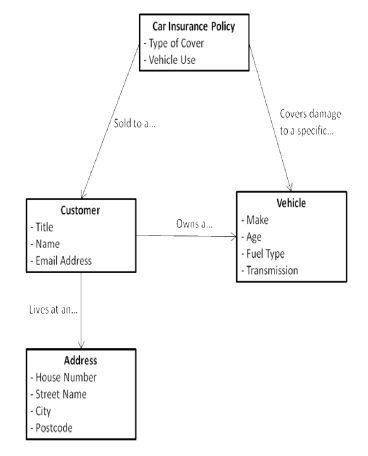

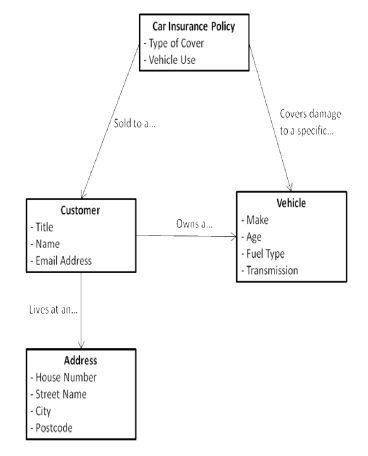

With a little digging we may find that someone has already done the hard work for us, or at the very least provided us with some interesting food for thought. Unfortunately, there is no standard name for what we are looking for. It may be called a class diagram, a domain model, an entity relationship diagram, an information architecture, a data model, or it could just be a scribble on a whiteboard. Regardless of the name, it is the visual appearance that typically catches our attention. Let us look at an example (Figure 4) from the world of motor insurance.

Figure 4: Structural relationships from the world of motor insurance

Whilst it would be possible to define an automatable set of steps to convert the type of information in Figure 4 into a Classification Tree, this kind of mechanical transformation is unlikely to deliver a Classification Tree that fits our needs. A more practical approach is to decide which parts of the diagram we wish to mirror in our Classification Tree and which parts we are going to discard as irrelevant.

The inputs and relationships we select often depend upon the purpose of our testing. Let us look at two Classification Trees that both take inspiration from Figure 4, but differ greatly in their visual appearance. For the purpose of these examples, let us assume that the information in Figure 4 was created to support the development of a car insurance comparison website.

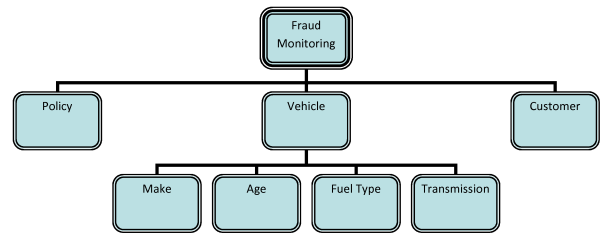

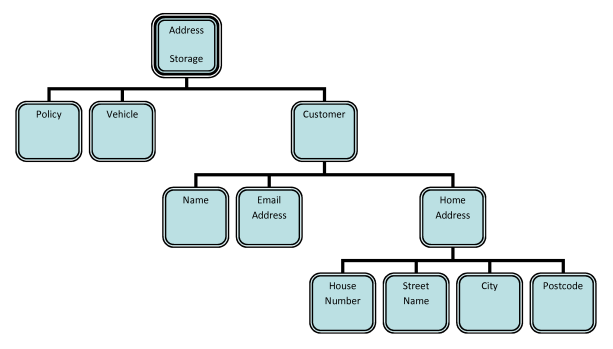

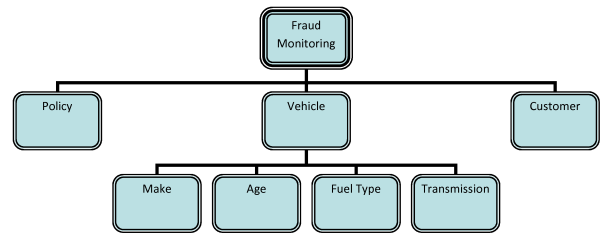

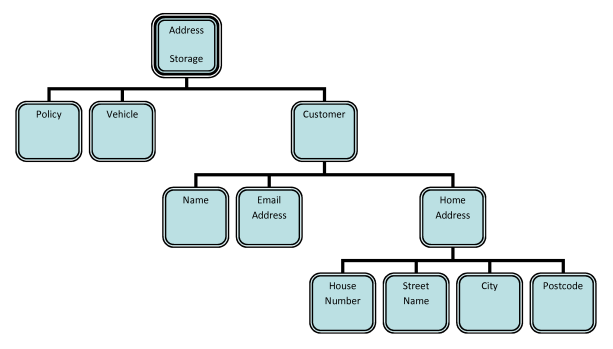

For our first piece of testing, we intend to focus on the fraud monitoring aspect of our website, which checks whether the make, age, fuel type and transmission entered for a particular vehicle match those held by the National Car Records Bureau. For our second piece of testing, we intend to focus on the website’s ability to persist different addresses, including the more obscure locations that do not immediately spring to mind. Now take a look at the two Classification Trees in Figure 5 and Figure 6. Notice that we have created two entirely different sets of branches to support our different testing goals. In our second tree, we have decided to merge a customer’s title and name into a single input called “Customer”. Why? Because for this piece of testing we cannot imagine ever wanting to change them independently.

Figure 5: Classification Tree to support the testing of fraud monitoring (root and branches only)

Figure 6: Classification Tree to support the testing of address persistency (root and branches only)

Fear not if you rarely encounter a class diagram, a domain model or anything similar. There are many other places we can look for hierarchical relationships. You never know, they may even be staring you right in the face.

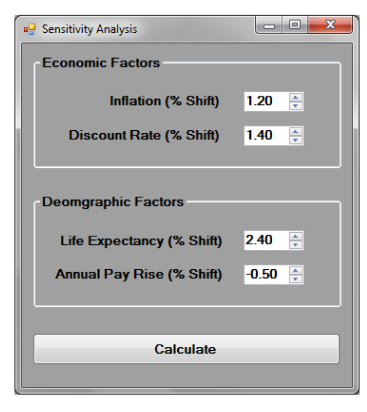

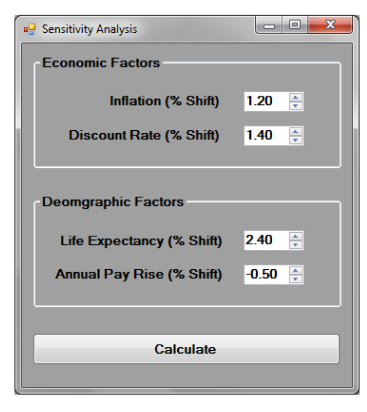

If the software we are testing has a graphical interface, this can be a great place to look for inspiration for the first cut of a Classification Tree. Imagine for a moment that we have been asked to test the sensitivity analysis module of a new pension scheme management system. The premise of the module is simple: how could unexpected economic and demographic events affect the performance of the pension scheme? Based upon discussions with the intended users of the software, these events have been grouped into two categories, which have been duly replicated in the user interface design (Figure 7). Now take a look at one possible Classification Tree for this part of our pension scheme management system (Figure 8). In just the same way that we can take inspiration from structural diagrams, we can also make use of graphical interfaces to help seed our ideas.

Figure 7 – Graphical Interface for Sensitivity Analysis Module

Figure 8 – Classification Tree for Sensitivity Analysis Module

Of course, if we relied only on graphical interfaces and structural diagrams to help organise our Classification Trees, a sad number of projects would never benefit from this technique. There are many other concrete examples we could discuss, but for now I will leave you with some general advice. Any time you encounter something that implies a relationship, such as “A is a type of B”, “X is made up of Y” or “For each M there must be at least one N”, just ask yourself: would my Classification Tree be easier to draw, communicate and maintain if it mirrored that relationship? If the answer is yes, you are onto a winner.

Applying Equivalence Partitioning or Boundary Value Analysis

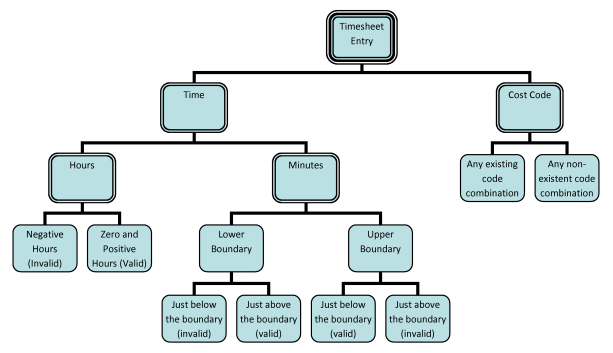

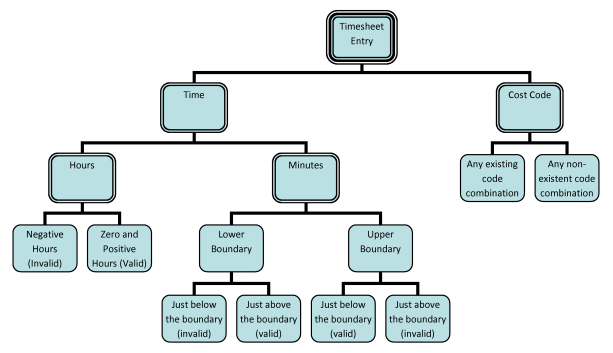

Assuming we are happy with our root and branches, it is now time to add some leaves. We do this by applying Boundary Value Analysis or Equivalence Partitioning to the inputs at the end of our branches. For the Classification Tree in Figure 9 this means the application of either technique to the inputs of Hours, Minutes and Cost Code, but not to the input of Time, as we have decided to express it indirectly by Hours and Minutes.

Figure 9 – Classification Tree to support the testing of timesheet entry (root and branches only)
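When branches are nested, only the inputs at the very end of each branch receive leaves. The following is a minimal Python sketch, assuming a hypothetical `Node` class and a root label of our own choosing, that walks a tree shaped like the one described for Figure 9 and lists the inputs that need leaves.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A branch in a hierarchical Classification Tree (hypothetical model)."""
    name: str
    children: list["Node"] = field(default_factory=list)


def inputs_needing_leaves(node: Node) -> list[str]:
    """Return the inputs at the very end of each branch, left to right.

    A parent such as Time is expressed indirectly through its children,
    so it never receives leaves of its own.
    """
    if not node.children:
        return [node.name]
    result: list[str] = []
    for child in node.children:
        result.extend(inputs_needing_leaves(child))
    return result


# Roughly the shape described for Figure 9 (the root label is assumed).
root = Node("Timesheet entry", [
    Node("Time", [Node("Hours"), Node("Minutes")]),
    Node("Cost Code"),
])

print(inputs_needing_leaves(root))  # ['Hours', 'Minutes', 'Cost Code']
```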

Equivalence Partitioning focuses on groups of input values that we assume to be “equivalent” for a particular piece of testing. This is in contrast to Boundary Value Analysis, which focuses on the “boundaries” between those groups. It should come as no great surprise that this focus flows through into the leaves we create, affecting both their quantity and visual appearance. Identifying groups and boundaries can require a great deal of thought. Fortunately, once we have some in mind, adding them to a Classification Tree could not be easier.

For no other reason than to demonstrate each technique, we will apply Boundary Value Analysis to the Minutes input, and Equivalence Partitioning to the Hours and Cost Code inputs. One possible outcome of applying these techniques is shown below.

Cost Code Input: Two groups

Group 1 – Any existing code combination

Group 2 – Any non-existent code combination

Hours Input: Two groups

Group 1 – Negative hours

Group 2 – Zero or more hours

Minutes Input: Two boundaries

Boundary 1 – Lower boundary (zero minutes)

Boundary 2 – Upper boundary (sixty minutes)

Now that we have the results of each technique, it is time to start adding them to our tree. For any input that has been the subject of Equivalence Partitioning, this is a single-step process. Simply find the relevant branch (input) and add the groups identified as leaves. This has the effect of placing the groups beneath the input they partition. For any input that has been the subject of Boundary Value Analysis, the process is a little longer, but not by much. As with Equivalence Partitioning, we must first find the relevant branch (input), but this time it is the boundaries that we add as leaves rather than the groups. The process is completed by adding two leaves under each boundary – one to represent the minimum meaningful amount below the boundary and another to represent the minimum meaningful amount above it. The complete tree can be seen in Figure 10.

Figure 10: Complete classification tree for timesheet entry (root, branches and leaves)
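The two procedures can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than a prescribed implementation: the function names are hypothetical, and we assume one minute is the minimum meaningful amount for the Minutes input.

```python
def partition_leaves(groups: list[str]) -> list[str]:
    # Equivalence Partitioning: a single step; the groups become the leaves.
    return list(groups)


def boundary_leaves(boundaries: dict[str, int], step: int) -> dict[str, dict[str, int]]:
    # Boundary Value Analysis: each boundary becomes a leaf, then two leaves
    # are added beneath it, one `step` below and one `step` above.
    return {
        name: {"Just below": value - step, "Just above": value + step}
        for name, value in boundaries.items()
    }


tree = {
    "Hours": partition_leaves(["Negative", "Zero or more"]),
    # Assumes one minute is the minimum meaningful amount for Minutes.
    "Minutes": boundary_leaves({"Lower boundary": 0, "Upper boundary": 60}, step=1),
    "Cost Code": partition_leaves(["Any existing code combination",
                                   "Any non-existent code combination"]),
}
# Lower boundary -> -1 and 1; Upper boundary -> 59 and 61.
```

The extra level of nesting under Minutes is what gives Boundary Value Analysis its distinctive shape in the tree, compared with the flat list of groups produced by Equivalence Partitioning.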

Specifying test cases

By this stage, if everything has gone to plan we should be looking at a beautiful Classification Tree, complete with root, branches and leaves. It is an exciting milestone, but we still have some big decisions left to make. Most importantly, what are our test cases going to look like?

To specify test cases based upon a Classification Tree, we need to select one leaf (a piece of test data) from each branch (an input the software we are testing is expecting). Each unique combination of leaves becomes the basis for one or more test cases. We can present this information in whatever format we like. One way is a simple list, such as the one below, which provides examples from the Classification Tree in Figure 10.

Leaf combination 1:

Hours = Negative

Minutes = Just above the lower boundary

Cost Code = Any existing code combination

Leaf combination 2:

Hours = Zero or more

Minutes = Just below the upper boundary

Cost Code = Any existing code combination

Leaf combination 3:

Hours = Negative

Minutes = Just below the lower boundary

Cost Code = Any non-existent code combination
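Selecting one leaf from each branch amounts to taking the Cartesian product of the branches. A small Python sketch, assuming the leaves of Figure 10 follow the groups and boundaries listed earlier (the labels in the figure itself may differ), shows how quickly the candidates grow: even this small tree produces 16 combinations, of which the list above selects three.

```python
from itertools import product

# Leaves at the end of each branch (BVA leaves flattened into labels).
leaves = {
    "Hours": ["Negative", "Zero or more"],
    "Minutes": [
        "Just below the lower boundary",
        "Just above the lower boundary",
        "Just below the upper boundary",
        "Just above the upper boundary",
    ],
    "Cost Code": ["Any existing code combination",
                  "Any non-existent code combination"],
}

# One leaf from each branch; every unique combination is a candidate test case.
combinations = [dict(zip(leaves, choice)) for choice in product(*leaves.values())]

print(len(combinations))  # 16, i.e. 2 x 4 x 2
```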

Whilst the list format above is OK for a quick example, presenting this information separately from the associated Classification Tree can make it difficult to see which combinations we have decided to include and which we have chosen to ignore. This in turn makes it harder to see our planned test coverage and to react to any changes to the software we are testing.

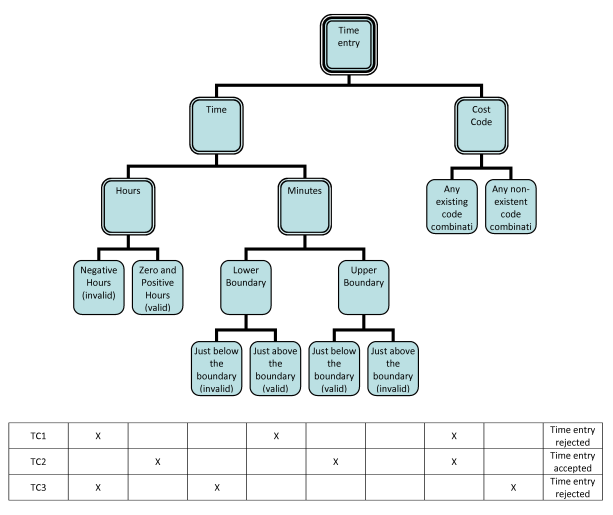

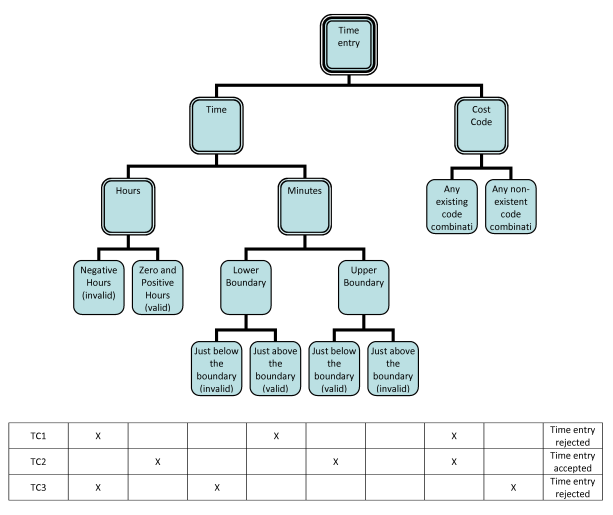

For this reason, a popular method for adding test cases to a Classification Tree is to place a single table beneath the tree, into which multiple test cases can be added, typically one test case per row. The table is given the same number of columns as there are leaves on the tree, with each column positioned directly beneath a corresponding leaf. Additional columns can also be added to preserve any information we believe to be useful. A column to capture the expected result for each test case is a popular choice.

Now we have a decision to make. Are we going to specify abstract test cases or concrete test cases? Or to put it another way, are we going to specify exact values to use as part of our testing or are we going to leave it to the person doing the testing to make this choice on the fly? Like many other decisions in testing, there is no universally correct answer, only what is right for a specific piece of testing at a particular moment in time.

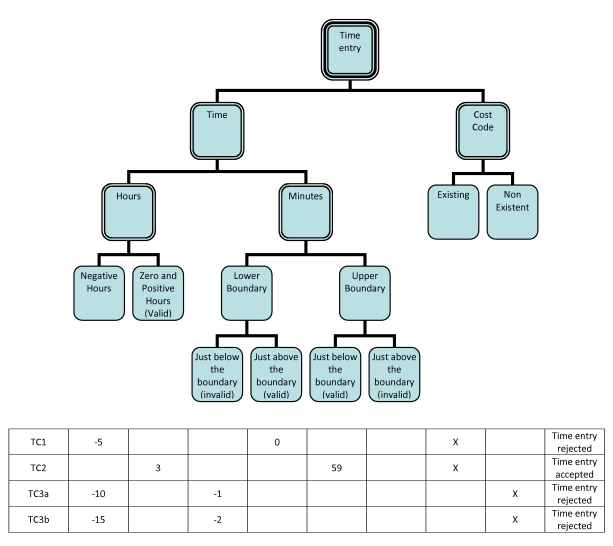

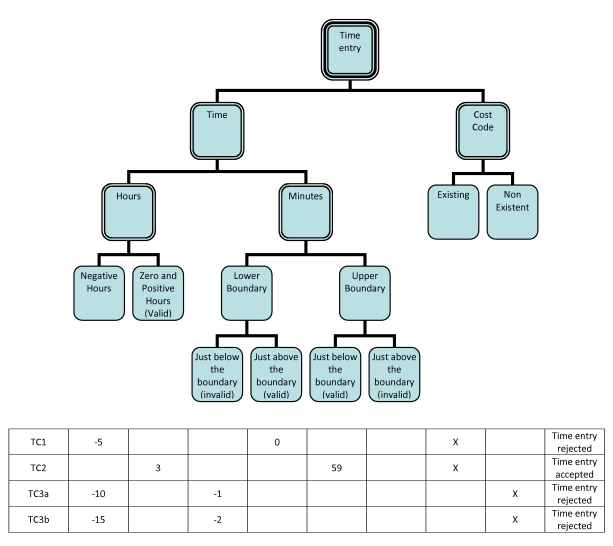

Specifying abstract test cases is relatively easy. Each unique leaf combination maps directly to one test case, which we can specify by placing a series of markers (crosses) in each row of our table. Figure 11 contains an example based upon the three leaf combinations we identified a moment ago.

Figure 11: Abstract Test Cases for timesheet entry

You will notice that there are no crosses in one of our columns. In this particular instance, this means that we have not specified a test case that sets the Minutes input to something just above the upper boundary. To call it an oversight may be unfair; it may have been a conscious choice. Either way, by aligning our test case table with our Classification Tree, it is easy to see our coverage and take any necessary action.
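The coverage check that the aligned table gives us at a glance can also be expressed in code. Here is a minimal sketch, assuming the three test cases are named TC1 to TC3 and use the leaf combinations listed earlier (both assumptions, since Figure 11 is not reproduced here), that finds every column containing no cross.

```python
# Every leaf in the tree (one column per leaf in the test case table).
columns = {
    "Hours": ["Negative", "Zero or more"],
    "Minutes": [
        "Just below the lower boundary",
        "Just above the lower boundary",
        "Just below the upper boundary",
        "Just above the upper boundary",
    ],
    "Cost Code": ["Any existing code combination",
                  "Any non-existent code combination"],
}

# Each row marks (crosses) exactly one leaf per branch.
table = {
    "TC1": {"Hours": "Negative", "Minutes": "Just above the lower boundary",
            "Cost Code": "Any existing code combination"},
    "TC2": {"Hours": "Zero or more", "Minutes": "Just below the upper boundary",
            "Cost Code": "Any existing code combination"},
    "TC3": {"Hours": "Negative", "Minutes": "Just below the lower boundary",
            "Cost Code": "Any non-existent code combination"},
}


def empty_columns(columns, table):
    """Return the leaves that no test case has marked."""
    marked = {(branch, leaf) for row in table.values() for branch, leaf in row.items()}
    return [(branch, leaf) for branch, leaves in columns.items()
            for leaf in leaves if (branch, leaf) not in marked]


print(empty_columns(columns, table))
# [('Minutes', 'Just above the upper boundary')]
```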

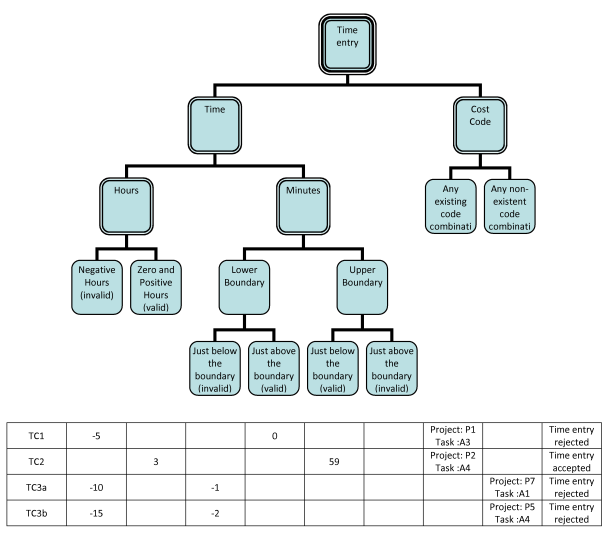

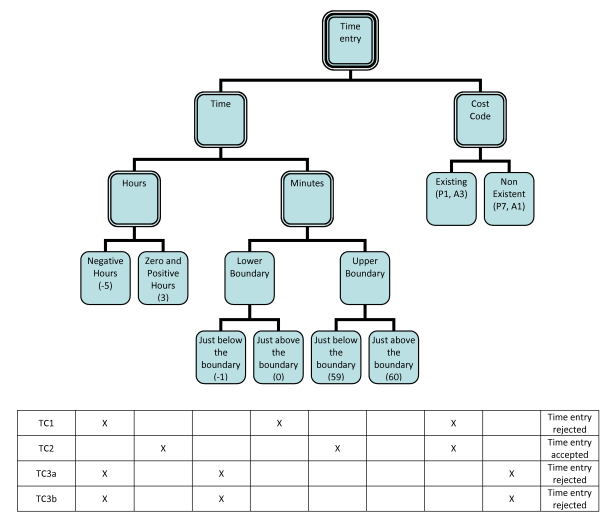

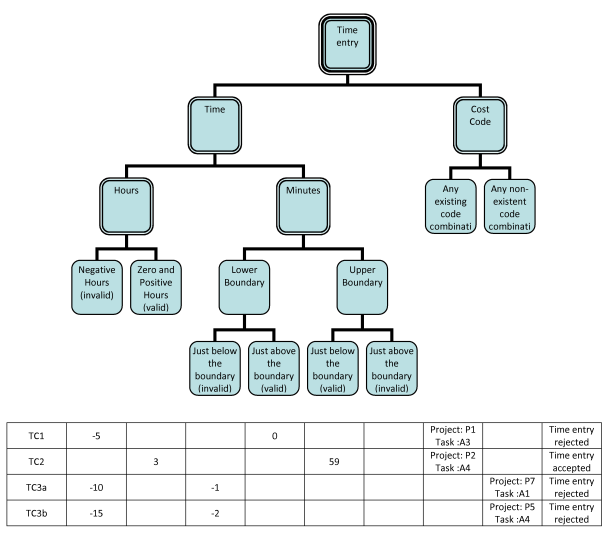

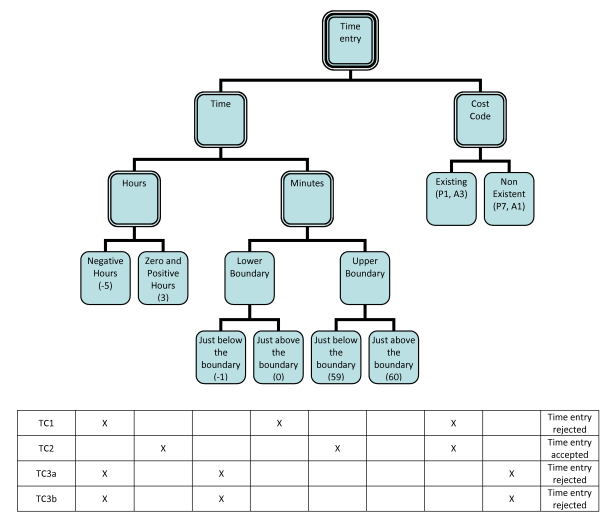

Now that we have seen how to specify abstract test cases using a Classification Tree, let us look at how to specify their concrete alternatives. The easiest way to create a set of concrete test cases is to replace the existing crosses in our table with concrete test data. This has the effect of providing exact values for each test case. It also gives us the opportunity to create multiple concrete test cases based upon a single combination of leaves.

Notice in the test case table in Figure 12 that we now have two test cases (TC3a and TC3b), both based upon the same leaf combination. Without adding more leaves, this can only be achieved by adding concrete test data to our table. It does go against the recommendation of Equivalence Partitioning, which suggests that just one value from each group (or leaf) should be sufficient; however, rules are made to be broken, especially by those responsible for testing.

Figure 12: Concrete Test Cases for timesheet entry

In Figure 12, notice that we have included two concrete values in each cell beneath the Cost Code branch – one for the Project Code input and one for the Task Code input. This is because when we drew our tree, we decided to summarise all Cost Code information into a single branch, a level of abstraction higher than the physical inputs on the screen. Now that we have switched to concrete test cases, we no longer have the luxury of stating that any existing code combination will do. We must provide exact test data for each input, and adding multiple values to a cell is one way to accomplish this. An alternative is to update our Classification Tree to represent the Project Code and Task Code as separate branches; however, this would result in a larger tree, which we may not want. There is, of course, a compromise. Nothing stops us from specifying part of a test case at an abstract level of detail and part at a concrete level of detail. The result can be the best of both worlds, with greater precision included only where necessary.

Figure 13: Hybrid Test Cases (half concrete values, half abstract values) for timesheet entry

One final option is to place the concrete test data in the tree itself. Notice how in Figure 14 there is a value in brackets in each leaf. This is the value to be used in any test case that incorporates that leaf. It does mean that we can only specify a single concrete value for each group (or a pair for each boundary) to be used across our entire set of test cases. If we are satisfied with this, the added benefit is that we only have to maintain the concrete values in one location and can go back to placing crosses in the test case table. It also means that TC3a and TC3b have now become the same test case, so one of them should be removed.

Figure 14: Concrete Test Cases (with values in leaves) for timesheet entry
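The idea of holding concrete values in the leaves can be sketched as a lookup from leaf to value. All values below, including the project and task codes, are hypothetical placeholders rather than the ones in Figure 14. Once each cross is resolved through the same lookup, TC3a and TC3b produce identical test data, and a simple pass removes the duplicate.

```python
# Hypothetical concrete values stored in the leaves: one per group and a
# pair (just below, just above) per boundary.
leaf_values = {
    ("Hours", "Negative"): -1,
    ("Hours", "Zero or more"): 8,
    ("Minutes", "Just below the lower boundary"): -1,
    ("Minutes", "Just above the lower boundary"): 1,
    ("Minutes", "Just below the upper boundary"): 59,
    ("Minutes", "Just above the upper boundary"): 61,
    ("Cost Code", "Any existing code combination"): ("P100", "T10"),
    ("Cost Code", "Any non-existent code combination"): ("P999", "T99"),
}

# The test case table now only holds crosses, i.e. which leaf each row uses.
table = {
    "TC1": {"Hours": "Negative", "Minutes": "Just above the lower boundary",
            "Cost Code": "Any existing code combination"},
    "TC2": {"Hours": "Zero or more", "Minutes": "Just below the upper boundary",
            "Cost Code": "Any existing code combination"},
    "TC3a": {"Hours": "Negative", "Minutes": "Just below the lower boundary",
             "Cost Code": "Any non-existent code combination"},
    "TC3b": {"Hours": "Negative", "Minutes": "Just below the lower boundary",
             "Cost Code": "Any non-existent code combination"},
}


def resolve(row):
    """Replace each cross with the concrete value held in that leaf."""
    return {branch: leaf_values[(branch, leaf)] for branch, leaf in row.items()}


def remove_duplicates(table):
    """Keep the first test case for each distinct set of concrete values."""
    kept, seen = {}, set()
    for name, row in table.items():
        key = tuple(sorted(resolve(row).items()))
        if key not in seen:
            seen.add(key)
            kept[name] = row
    return kept


print(list(remove_duplicates(table)))  # ['TC1', 'TC2', 'TC3a']
```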

Brainstorming test cases first

In much the same way that an author can suffer from writer’s block, we are not immune from the odd bout of tester’s block. Drawing a suitable Classification Tree on a blank sheet of paper is not always as easy as it sounds. We can occasionally find ourselves staring into space, wondering what branch or leaf to add next, or whether we have reached an acceptable level of detail.

When we find ourselves in this position, it can be helpful to turn the Classification Tree technique on its head and start at the end. One of the benefits of the Classification Tree technique is that it allows us to understand the coverage of a number of interrelated test cases, but by creating a Classification Tree first, we assume (possibly without even realising it) that we have a vague idea of the test cases we ultimately plan to create. In reality, this is not always the case, so when we encounter such a situation a switch in mindset can help us on our way.

In other walks of life, people rely on techniques like clustering to help them explore concrete examples before placing them into a wider context or positioning them in a hierarchical structure. A Classification Tree can be thought of as a way of providing structure and context for a number of test cases, so there is a lot to be said for brainstorming a few test cases before drawing one. Hopefully we will not need many, just a few ideas and examples to help focus our direction before drawing our tree.

It is worth mentioning that the Classification Tree technique is rarely applied entirely top-down or bottom-up. In reality, the outline of a tree is often drawn, followed by a few draft test cases, after which the tree is pruned or grown some more, a few more test cases are added, and so on, until finally we reach the finished product. But our work does not stop there. Because of their style, Classification Trees are easy to update, and we should take full advantage of this fact when we learn something new about the software we are testing. This often happens when we perform our test cases, which in turn triggers a new round of updates to our Classification Tree.

Pruning a cluttered tree

As we draw a Classification Tree it can feel rewarding to watch the layers and detail grow, but by the time we come to specify our test cases we are often looking for any excuse to prune back our earlier work. Remember that we create Classification Trees so that we may specify test cases faster and with a greater level of appreciation for their context and coverage. If we find ourselves spending more time tinkering with our tree than we do on specifying or running our test cases then maybe our tree has become too unwieldy and is in need of a good trim.

If we have chosen to represent one or more hierarchical relationships in our tree, we should ask ourselves whether they are all truly necessary. A tree with too much hierarchy can become difficult to read. By all means, we should add hierarchical relationships where they improve communication, but we should also aim to do so sparingly.

If Boundary Value Analysis has been applied to one or more inputs (branches), then we can consider removing the leaves that represent the boundaries. This will have the effect of reducing the number of elements in our tree and also its height. Of course, this will make it harder to identify at a glance where Boundary Value Analysis has been applied, but the compromise may be justified if it helps improve the overall appearance of our Classification Tree.

If we find ourselves with a Classification Tree that contains entirely concrete inputs (branches), we should ask ourselves whether we need that level of precision across the entire tree. We may find that some inputs have been added out of necessity (such as mandatory inputs) and are potentially only indirectly related to our testing goal. If this is the case, we can consider combining multiple concrete branches into a single abstract branch. For example, branches labelled “title”, “first name” and “surname” could be combined into a single branch labelled “person’s name”. A similar merging technique can also be applied to branches (both concrete and abstract) when we do not anticipate changing them independently.
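A quick way to gauge the effect of this kind of pruning is to count the leaf combinations before and after. The sketch below assumes, purely for illustration, two hypothetical leaves per branch both before and after the merge.

```python
from math import prod

# Before pruning: three concrete branches (leaves are hypothetical).
concrete = {
    "Title": ["Mr", "Dr"],
    "First name": ["Ann", "A very long first name"],
    "Surname": ["Lee", "O'Brien"],
}

# After pruning: one abstract branch whose leaves describe a whole name.
abstract = {
    "Person's name": ["Typical name", "Name with unusual characters"],
}


def combination_count(branches):
    """Number of ways to pick one leaf from every branch."""
    return prod(len(leaves) for leaves in branches.values())


print(combination_count(concrete))  # 8
print(combination_count(abstract))  # 2
```

Fewer combinations is not automatically better, but it does show why a merged branch can make a cluttered tree, and its test case table, much easier to work with.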

Implicit test cases

When we find ourselves short of time, there is always the option of forfeiting the ubiquitous test case table for something that requires the bare minimum of effort. Rather than using a tabular format (as shown in the previous section), we can instead use a coverage target to communicate the test cases we intend to run. We do this by adding a small note to our Classification Tree, in which we can write anything we like, as long as it succinctly communicates our target coverage. Sometimes just a word will do; other times a lengthier explanation is required. Let us look at an example to help understand the principle.

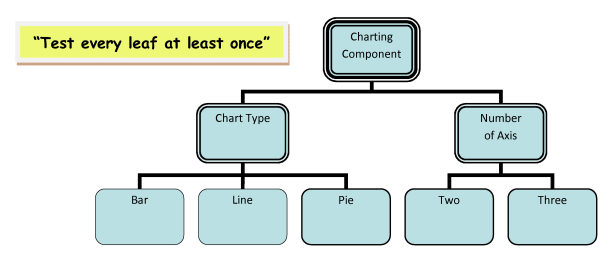

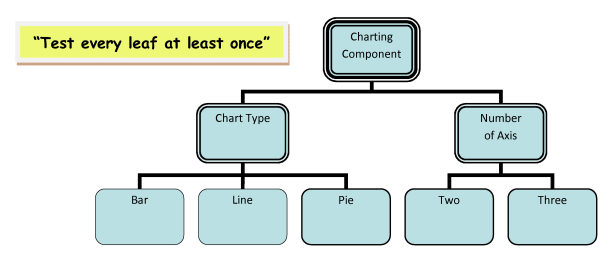

Imagine for a moment that we are testing a new charting component that can display data based on either two or three axes. It is the first time the charting component has ever been tested, so with the intention of finding any obvious problems as quickly as possible, we decide to draw a Classification Tree (Figure 15) around two of the broadest inputs we can control – the type of chart (bar, line or pie) and the number of axes (two or three).

We now need to decide what test cases we intend to run, but rather than presenting them in a table, we are going to express them as a coverage target. Remember, in this example we are not looking for a thorough piece of testing, just a quick pass through all of the major features. Based upon this decision, we need to describe a coverage target that meets our needs. There are countless options, but let us take a simple one for starters: “Test every leaf at least once”.

Figure 15 – Classification Tree for charting component (with coverage target note)

That’s it. We have now defined our test cases (implicitly) for this piece of testing. But how do we (or anyone else) know what test cases to run? We know by applying the coverage target in real time as we perform the testing. If we find ourselves missing the test case table, we can still see it; we just need to close our eyes and there it is in our mind’s eye. Figure 16 below shows one possible version of our implied test case table.

Figure 16 – Classification Tree for charting component (with example test case table)

Now imagine for a moment that our charting component comes with a caveat. Whilst a bar chart and a line chart can display three-dimensional data, a pie chart can only display data in two dimensions. With this newfound information, we may decide to update our coverage note: “Test every leaf at least once. Note, a pie chart cannot be 3D”.

As we interact with our charting component, this coverage note can be interpreted in two ways. As we go about testing every leaf at least once, we may avoid a 3D pie chart because we know it is not supported. Alternatively, we may acknowledge that a 3D pie chart is not supported, but try it anyway to understand how the component handles this exception. Leaving this choice until the moment we are testing is not necessarily a bad thing; we can make a judgement call at the time. However, if we want to be more specific, we can always add more information to our coverage note: “Test every leaf at least once. Note, a pie chart cannot be 3D (do not test)”.
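An implicit coverage target can still be turned into an explicit test case table when we want one. The sketch below is one possible interpretation of “Test every leaf at least once”, using a greedy selection that we have swapped in for illustration; it is not part of the Classification Tree technique, and a greedy choice is not guaranteed to find the smallest set for larger trees. The optional `allowed` predicate, a hypothetical parameter, expresses the “do not test” caveat for 3D pie charts.

```python
from itertools import product


def every_leaf_once(branches, allowed=lambda combo: True):
    """Greedy sketch of the coverage target "Test every leaf at least once".

    Repeatedly picks the allowed leaf combination that covers the most
    leaves not yet covered. Returns the chosen test cases and any leaves
    that no allowed combination can reach.
    """
    names = list(branches)
    uncovered = {(name, leaf) for name in names for leaf in branches[name]}
    candidates = [dict(zip(names, choice))
                  for choice in product(*(branches[name] for name in names))]
    candidates = [combo for combo in candidates if allowed(combo)]

    def gain(combo):
        # How many still-uncovered leaves this combination would cover.
        return sum((name, combo[name]) in uncovered for name in names)

    chosen = []
    while uncovered:
        best = max(candidates, key=gain, default=None)
        if best is None or gain(best) == 0:
            break  # the remaining leaves cannot be covered
        chosen.append(best)
        uncovered -= {(name, best[name]) for name in names}
    return chosen, uncovered


chart = {"Chart type": ["Bar", "Line", "Pie"], "Axes": ["2D", "3D"]}


def not_3d_pie(combo):
    # "Note, a pie chart cannot be 3D (do not test)".
    return not (combo["Chart type"] == "Pie" and combo["Axes"] == "3D")


plan, missed = every_leaf_once(chart)
safe_plan, safe_missed = every_leaf_once(chart, allowed=not_3d_pie)

for combo in safe_plan:
    print(combo["Chart type"], combo["Axes"])
# Bar 2D
# Line 3D
# Pie 2D
```

Three test cases are enough here because the largest branch (chart type) has three leaves, and the 3D leaf can be covered by a bar or line chart instead of a pie chart.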

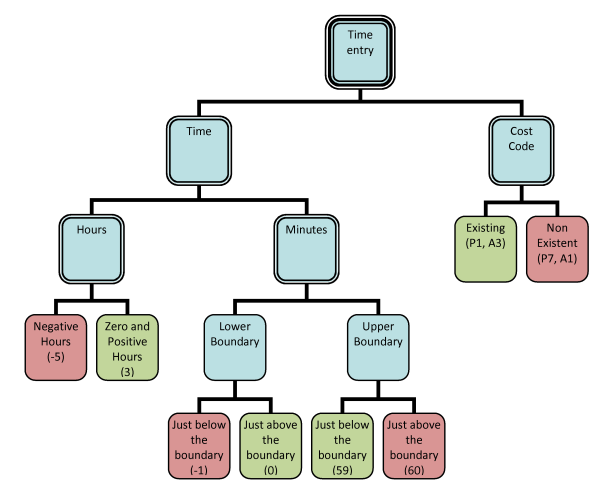

Colour-coded Classification Trees

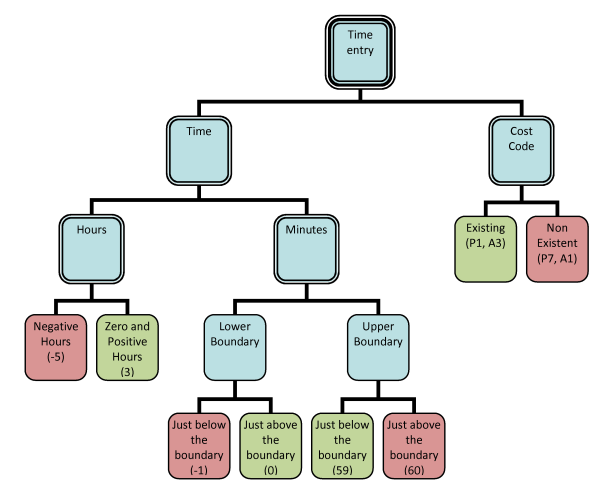

Whilst not always necessary, a splash of colour can make a great addition to a Classification Tree. It allows us to add an extra dimension of information that would not be possible if we stuck to a single colour.

A popular use of colour is to distinguish between positive and negative test data. In summary, positive test data is data that we expect the software we are testing to happily accept before going about its merry way, doing whatever it is supposed to do best. We create test cases based on this kind of data to feel confident that the thing we are testing can do what it was intended to do. Imagine a piece of software that will tell you your age if you provide your date of birth. Any date of birth that matches the date on which we are testing, or any date in the past, could be considered positive test data, because this is data the software should happily accept.

Negative test data is just the opposite. It is any data that the thing we are testing cannot accept, either by deliberate design or because it does not make sense to do so. We create test cases based on this kind of data to feel confident that if data is presented outside of the expected norm, the software we are testing does not just crumble in a heap, but instead degrades gracefully. Returning to our date of birth example, if we were to provide a date in the future, this would be an example of negative test data. Why? Because the creators of our example have made a deliberate design choice not to accept future dates, as for them it does not make sense to do so.
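The date of birth example reduces to a single comparison. Here is a minimal sketch, assuming the rule described above (today or any earlier date is accepted, any future date is rejected) and a hypothetical function name.

```python
from datetime import date


def is_positive_dob(dob: date, today: date) -> bool:
    """Hypothetical rule: a date of birth on or before today is accepted."""
    return dob <= today


today = date(2024, 6, 1)  # fixed so the example gives the same result every run

print(is_positive_dob(date(1990, 3, 15), today))  # True  -> positive test data
print(is_positive_dob(today, today))              # True  -> positive test data
print(is_positive_dob(date(2030, 1, 1), today))   # False -> negative test data
```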

Combining these concepts with a Classification Tree could not be easier. We just need to decide whether each leaf should be categorised as positive or negative test data and then colour-code it accordingly. A colour-coded version of our timesheet system Classification Tree is shown in Figure 17. Positive test data is presented with a green background, whilst negative test data is presented with a red background. Marking our leaves in this way allows us to distinguish more easily between positive and negative test cases.

Figure 17: Colour-coded Classification Tree for timesheet entry

Summary

In this chapter, we have seen:

– How it is useful to consider the growth of a Classification Tree in three stages – the root, the branches and the leaves.

– How inspiration for Classification Trees can be taken from decomposing processes, analysing hierarchical relationships and brainstorming test ideas.

– How Classification Trees can support the creation of abstract test cases, concrete test cases and a mixture of both.

– How to identify Classification Trees that may benefit from pruning.

– How to implicitly preserve and communicate test cases with coverage target notes.

– How to add colour to a Classification Tree to improve communication.